What is it, and what does it do?

So let’s discuss this for a second. We all see how Autopilot magically knows every device out there and, once a device is turned on for the first time, it just knows which company it belongs to. Not only that, it also knows which user will be using the device, and once that user enters the correct password (not a username, just a password), it installs all the applications, configures the keyboard, desktop background and Start menu layout, installs Office and other applications such as a VPN client and PDF readers, and starts OneDrive sync. It also configures antivirus, firewall, disk encryption… everything IT and security care about.

But what is true and what is “marketing”? This is what the Microsoft documentation says about Autopilot:

“Windows Autopilot enables you to:

Automatically join devices to Azure Active Directory (Azure AD) or Active Directory (via Hybrid Azure AD Join). For more information about the differences between these two join options, see Introduction to device management in Azure Active Directory.

Auto-enroll devices into MDM services, such as Microsoft Intune (Requires an Azure AD Premium subscription for configuration).

Restrict the Administrator account creation.

Create and auto-assign devices to configuration groups based on a device’s profile.

Customize OOBE content specific to the organization.”

https://docs.microsoft.com/en-us/mem/autopilot/windows-autopilot

The truth about Autopilot. 🙂

If I simplify just a little bit here, and please do not hold this against me, Autopilot is nothing more than Unattend.xml. Yes, I know there are major differences between the two, but in the end, that’s what it does. It allows us to define whether the device joins Azure AD or Active Directory, what name it gets, whether the license terms and privacy settings screens are displayed, whether the user is an administrator on the device, and which region and keyboard to set. This is pretty much a subset of what Unattend.xml can configure.

So what does Microsoft mean by this:

Instead of re-imaging the device, your existing Windows 10 installation can be transformed into a “business-ready” state that can:

apply settings and policies

install apps

change the edition of Windows 10 being used (for example, from Windows 10 Pro to Windows 10 Enterprise) to support advanced features.

https://docs.microsoft.com/en-us/mem/autopilot/windows-autopilot

Yes. You can most definitely do all this, and we will do it, but this is, at least in my opinion, not part of Autopilot. This is all done by MDM, in our case Intune. Why am I saying this? Because I can achieve exactly the same result on any device that is brought under MDM by any other method, for example manual enrollment. The end result will be exactly the same.

There are several ways we can set up Autopilot.

First, there is the choice between User-driven and Self-deploying mode. We will be using User-driven deployment. Self-deploying is an interesting scenario for kiosk devices, and we’ll take a look at it separately, but for our client, Kuhar LLC, User-driven deployment is the one we will choose.

Second, we can choose whether devices are Azure AD joined or Hybrid Azure AD joined. We will be using Azure AD join. In all my engagements with clients, I have yet to encounter a scenario that blocks devices from being Azure AD joined instead of Hybrid joined. There might be additional things to consider, for example share permissions granted to computer objects instead of user objects, but these can easily be changed, and they do not outweigh the added complexity that Hybrid join brings.

With that out of the way, let’s take a look at Autopilot and set it up for our customer.

First, we go to the MEM admin center, https://endpoint.microsoft.com/. Please excuse me if I call it the Intune portal in some places. 🙂

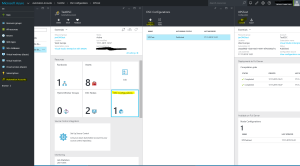

Select Devices, go to Windows and click Windows enrollment.

This opens the Windows enrollment blade, where we can see several options that we will need to set along the way.

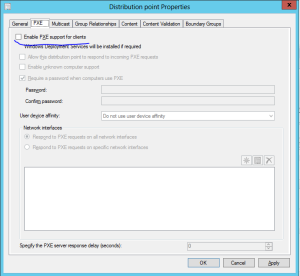

First, we will create a deployment profile, so let’s select Deployment Profiles and then Create profile. Under Create profile, select Windows PC.

This creates a new profile for us, so let’s give it a name and click Next. For now, we will leave Convert all targeted devices to Autopilot set to No, but that will change once we deploy the profile to a group.

Now that the profile is created, we can select all the things we talked about above.

First, it’s a User-driven deployment mode.

Second, Azure AD joined.

We will hide all the EULA and privacy settings, make users administrators on their devices, and have them select their keyboard and region.

We will, however, apply a naming standard for Autopilot devices: we will name them AP-%SERIAL%. I personally do not see much use in this, but we like structured names; that is how we have always done it, so why not. 🙂

Now we proceed by clicking Next. I will not be defining any scope tags, at least for now, so let’s click Next to get to the Assignments page. This is where we would select the group to deploy this profile to, but that group has not been created yet, so let’s skip this step as well and come back to it later. Finally, review the information and, if we are satisfied with our settings, Create the profile.
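To make the AP-%SERIAL% template a bit more concrete, here is a small Python sketch of how such a template expands. It is only an illustration, not how Autopilot implements it: the 15-character limit and the allowed characters (letters, digits and hyphens, not digits only) follow Microsoft's documented naming rules for this setting, while cutting an over-long result to its first 15 characters is an assumption of this sketch. The serial numbers are made up.

```python
import re

MAX_NAME_LENGTH = 15  # Windows computer names are limited to 15 characters
VALID_NAME = re.compile(r"^[A-Za-z0-9-]+$")  # letters, digits and hyphens only


def expand_device_name(template: str, serial: str) -> str:
    """Expand the %SERIAL% macro in a device name template (illustration only).

    Assumption: an expanded name longer than 15 characters is cut to the
    first 15 characters.
    """
    name = template.replace("%SERIAL%", serial)[:MAX_NAME_LENGTH]
    if not VALID_NAME.match(name):
        raise ValueError(f"invalid characters in device name: {name!r}")
    if name.isdigit():
        raise ValueError("a device name must not consist only of digits")
    return name


print(expand_device_name("AP-%SERIAL%", "5CG1234XYZ"))                # AP-5CG1234XYZ
print(expand_device_name("AP-%SERIAL%", "0593-1364-0171-2345-6789"))  # AP-0593-1364-01
```

Under the truncation assumption, the second call shows how a long, VM-style serial number can end up shortened in the final device name.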

So now we have a Deployment Profile created, let us create a group to which it will be assigned.

Go to Groups and create a new group. It will be a Security group with a name that makes the most sense to you; for me it will be Win10 – Autopilot Corp Devices. I like to use prefixes such as Win10- whenever I create a series of groups, policies, profiles, apps, etc. This makes it much easier to find things later. Give the group a description and select the Dynamic Device membership type. Now click Add dynamic query and enter the following query.

(device.devicePhysicalIds -any (_ -eq "[OrderID]:Corp"))

This query matches every device that was added to Autopilot (the Autopilot information is stored in the device's devicePhysicalIds attribute) and has an OrderID of Corp. Corp is the tag I will use; you can use other tags.

To enter the query, click Edit on the Configure rules page.

Now simply Save the query, Create the group, and we are done. Go back to Deployment profiles and assign the profile to the group we just created.
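To make the rule's semantics concrete, here is a small Python sketch that evaluates the same condition against a device's devicePhysicalIds list: -any is true when at least one entry satisfies the condition in parentheses, and -eq compares that entry with the string [OrderID]:Corp. The comparison is done case-insensitively, reflecting my understanding that Azure AD dynamic rules treat string comparisons that way; treat that as an assumption and verify it in your tenant. The sample IDs, including the [ZTDId] entries, are made up, and of course Azure AD evaluates the real rule itself.

```python
from typing import Iterable


def matches_group_tag_rule(device_physical_ids: Iterable[str], tag: str = "Corp") -> bool:
    """Illustrate: device.devicePhysicalIds -any (_ -eq "[OrderID]:<tag>").

    Assumption: string comparison is case-insensitive, as in Azure AD rules.
    """
    target = f"[OrderID]:{tag}".casefold()
    # -any: true if at least one element satisfies the condition in parentheses
    return any(value.casefold() == target for value in device_physical_ids)


# Made-up devicePhysicalIds values for two Autopilot devices
corp_device = ["[ZTDId]:11111111-2222-3333-4444-555555555555", "[OrderID]:Corp"]
sales_device = ["[ZTDId]:66666666-7777-8888-9999-000000000000", "[OrderID]:Sales"]

print(matches_group_tag_rule(corp_device))   # True
print(matches_group_tag_rule(sales_device))  # False
```

A device with no [OrderID] entry at all, for example one that was never given a group tag, never matches the rule.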

Next, we need to add a device to Autopilot. Now how do we do that?

Adding a device to Autopilot means adding information to Intune that uniquely identifies your device in the world. That information is the hardware hash. There are two general ways of doing this. One is to extract this information from the device itself and add it to Intune. The other is to ask your vendor/partner to add this information to Intune for you.

Your vendor/partner does not have to add the full hardware hash to Intune; they only have to add a serial number. You might think that this is not fair. Why do you have to supply the full 4K (4096) characters for each device, while vendors/partners only need to supply a serial number that is about 10 characters long? That is because Microsoft trusts vendors to assign devices to the correct clients, and if they do not, it is quite easy for Microsoft to have a word with them. On the other hand, if you know how a manufacturer assigns serial numbers to devices, it is quite easy for an evil person to grab all of those serial numbers and assign the devices to themselves. That would prevent anyone else from claiming them for their tenant or, even worse, let the attacker deploy Autopilot profiles to those devices, preventing the people who bought them from using them. In short, hardware hash it is.
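When you extract the hash from the device yourself but do not upload it directly, Get-WindowsAutoPilotInfo can write it to a CSV file that is then imported on the Windows Autopilot devices blade. As far as I know, that file's header is Device Serial Number, Windows Product ID and Hardware Hash, optionally followed by Group Tag and Assigned User. The Python sketch below sanity-checks such a file before upload; check_autopilot_csv is a hypothetical helper, and treating the hash as Base64 text is an assumption.

```python
import base64
import csv
import io

REQUIRED_COLUMNS = ("Device Serial Number", "Windows Product ID", "Hardware Hash")


def check_autopilot_csv(text: str) -> list[str]:
    """Return human-readable problems found in a hardware hash CSV (hypothetical helper)."""
    reader = csv.DictReader(io.StringIO(text))
    header = reader.fieldnames or []
    missing = [column for column in REQUIRED_COLUMNS if column not in header]
    if missing:
        return ["missing column(s): " + ", ".join(missing)]

    problems = []
    for row_number, row in enumerate(reader, start=1):
        if not (row["Device Serial Number"] or "").strip():
            problems.append(f"row {row_number}: empty serial number")
        hardware_hash = (row["Hardware Hash"] or "").strip()
        if not hardware_hash:
            problems.append(f"row {row_number}: empty hardware hash")
            continue
        try:
            # Assumption: the hash is Base64 text; validate=True rejects stray characters
            base64.b64decode(hardware_hash, validate=True)
        except ValueError:  # binascii.Error is a subclass of ValueError
            problems.append(f"row {row_number}: hardware hash is not valid Base64")
    return problems


sample = (
    "Device Serial Number,Windows Product ID,Hardware Hash,Group Tag\n"
    "5CG1234XYZ,,AAECAw==,Corp\n"  # made-up serial and a tiny stand-in hash
)
print(check_autopilot_csv(sample))  # []
```

A real hash is of course far longer than the stand-in value used here; the point is only to catch empty or mangled fields before the import fails.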

Ideally, you want to talk to your vendor and have them add every new device you buy to Autopilot. But for initial testing we will be using virtual machines, and those need to be added to Autopilot manually. To do this, we will use a nifty script brought to us by Michael Niehaus: https://www.powershellgallery.com/packages/Get-WindowsAutoPilotInfo/3.5

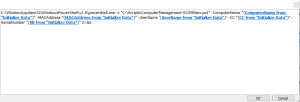

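So we will start a VM with Windows installed but not yet configured. When we get to the OOBE screen, we’ll press Shift + F10 to open CMD, and from CMD we’ll start PowerShell. On a fresh installation the execution policy normally blocks scripts, so you may first need to allow them for this session only with Set-ExecutionPolicy -Scope Process -ExecutionPolicy RemoteSigned. Then we install the Get-WindowsAutoPilotInfo script: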

Install-Script -Name Get-WindowsAutoPilotInfo

Then we run it with the -GroupTag, -Assign and -Online parameters. -GroupTag adds the group tag that our dynamic group membership rule looks for. Yes, I know it’s called OrderID there, which is what it used to be called in Intune/Autopilot as well way back, but it was renamed, just not in Azure AD, so yeah… OrderID and group tag are one and the same. For now.

-Assign makes the script wait for the Autopilot profile assignment to complete before it finishes. This can take a long time, because we are using a dynamic group.

The -Online parameter adds the device to Autopilot through the Microsoft Graph API for Intune.
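Under the hood, -Online means a call to Microsoft Graph. My understanding is that the device is created as an importedWindowsAutopilotDeviceIdentity, posted to deviceManagement/importedWindowsAutopilotDeviceIdentities with the serial number, the Base64 hardware hash and the group tag in the body. Check the Graph reference before relying on this, and note that the script itself may use a different (beta) endpoint. The Python sketch below only builds the request URL and JSON body; it does not authenticate or send anything, and build_import_request is a hypothetical helper.

```python
import base64
import json

# Assumed Microsoft Graph v1.0 collection for importing Autopilot devices
IMPORT_URL = ("https://graph.microsoft.com/v1.0/deviceManagement/"
              "importedWindowsAutopilotDeviceIdentities")


def build_import_request(serial_number: str, hardware_hash: str,
                         group_tag: str = "") -> tuple[str, str]:
    """Build (url, json_body) for importing one device; nothing is sent."""
    # Fail early if the hash is not Base64 text (Graph binary fields are Base64 in JSON)
    base64.b64decode(hardware_hash, validate=True)
    body = {
        "serialNumber": serial_number,
        "hardwareIdentifier": hardware_hash,
        "groupTag": group_tag,  # surfaces as [OrderID]:<tag> in Azure AD
    }
    return IMPORT_URL, json.dumps(body)


# Made-up serial number and a tiny stand-in hash
url, body = build_import_request("5CG1234XYZ", "AAECAw==", group_tag="Corp")
print(url)
print(body)
```

Sending the request would additionally need an access token for an account with Intune permissions, which is exactly what the sign-in prompt of the script takes care of.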

So we are running the following command:

Get-WindowsAutoPilotInfo.ps1 -GroupTag Corp -Online -Assign

When the script finishes, our device will be visible in Autopilot, it will be a member of the Win10 – Autopilot Corp Devices group, and it will have a deployment profile assigned. When running with the -Online parameter, you will have to sign in with a user that has permissions in Intune to add Autopilot devices. When running it for the first time, you will probably be asked to grant consent to the application, if that has not been done before.
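The waiting that -Assign does boils down to polling: ask for the device's profile assignment status, sleep, and ask again until a profile shows up or you give up. The Python sketch below shows only that pattern and is not the script's actual code. The get_status callable is a hypothetical stand-in for a real Graph query, the status strings are modelled on (but not guaranteed to match) Graph's profile assignment status values, and the interval and attempt limits are arbitrary.

```python
import time
from typing import Callable, Optional


def wait_for_assignment(get_status: Callable[[], str],
                        interval_s: float = 30.0,
                        max_attempts: int = 120,
                        sleep: Callable[[float], None] = time.sleep) -> Optional[int]:
    """Poll until the status starts with "assigned".

    Returns the attempt number on success, or None if it never happened.
    """
    for attempt in range(1, max_attempts + 1):
        if get_status().startswith("assigned"):
            return attempt
        if attempt < max_attempts:
            sleep(interval_s)  # give the dynamic group and profile time to catch up
    return None


# Simulated status sequence instead of real Graph calls
statuses = iter(["notAssigned", "pending", "assignedInSync"])
print(wait_for_assignment(lambda: next(statuses), sleep=lambda _: None))  # 3
```

Injecting sleep keeps the function easy to test; with the defaults of 120 attempts 30 seconds apart, it gives up after roughly an hour.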

So, let’s check that all this really happened. Go back to the MEM admin center, navigate to Devices -> Windows -> Windows enrollment and select Devices. This opens the Windows Autopilot devices blade, where you can see your Autopilot devices.

Here we can see information about the device we added to Autopilot: its serial number, the fact that it is a virtual machine, which group tag it has, and whether a profile is assigned to it. If all went well, you should see a similar result.

Now that this is done, let’s take a look at what this actually does when we start the virtual machine. Restart the VM and let’s see what happens.

We skip all of the OOBE screens and get directly to a screen welcoming us to our organization.

Yes, I know, I need to change the name of my directory 🙂

So, enter your username and, on the next screen, your password. Once the process finishes, you will have a machine that is named according to our standard and is joined to your Azure AD.

So that is it. That is what Autopilot does.

Next time, we will build on this success and start playing with the real deal: adding devices to Intune, installing applications, configurations, etc.

Until then, stay safe.